A Simple Virtual Staging Pipeline

A simple virtual staging pipeline based on Stable Diffusion, trained in InstructPix2Pix fashion on a paired image dataset.

Introduction

This project tackles the training of an image-to-image model that performs virtual staging, i.e. adding furniture and decor to photos of empty rooms. The dataset consisted of 500 pairs of empty and staged images of the same room, which allowed us to train our own model.
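Loading the pairs is mostly bookkeeping. This minimal sketch assumes a hypothetical layout in which each empty image in `empty/` and its staged counterpart in `staged/` share a file stem (e.g. `empty/0042.jpg` and `staged/0042.jpg`); the project's actual file layout is not described, and `find_pairs` is an illustrative helper name.

```python
from pathlib import Path

# Hypothetical layout: <root>/empty/<id>.<ext> and <root>/staged/<id>.<ext> share a stem.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def _index_images(folder):
    """Map file stem -> path for every image file in a folder."""
    return {p.stem: p for p in folder.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES}


def find_pairs(root):
    """Return sorted (empty_path, staged_path) tuples whose file stems match."""
    root = Path(root)
    empty = _index_images(root / "empty")
    staged = _index_images(root / "staged")
    # Keep only ids present on both sides; unmatched files are silently skipped.
    common = sorted(empty.keys() & staged.keys())
    return [(empty[k], staged[k]) for k in common]
```

Matching on stems rather than on sorted file order avoids silently misaligning pairs when one side has a missing or extra file.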

Disclaimer: This project was done in May 2024 and uses Stable Diffusion 1.5 as the base model. Today, much better results can be achieved with off-the-shelf image editing models such as Flux, Qwen Image or NanoBanana; that level of performance was not available at the time of this project.

Method

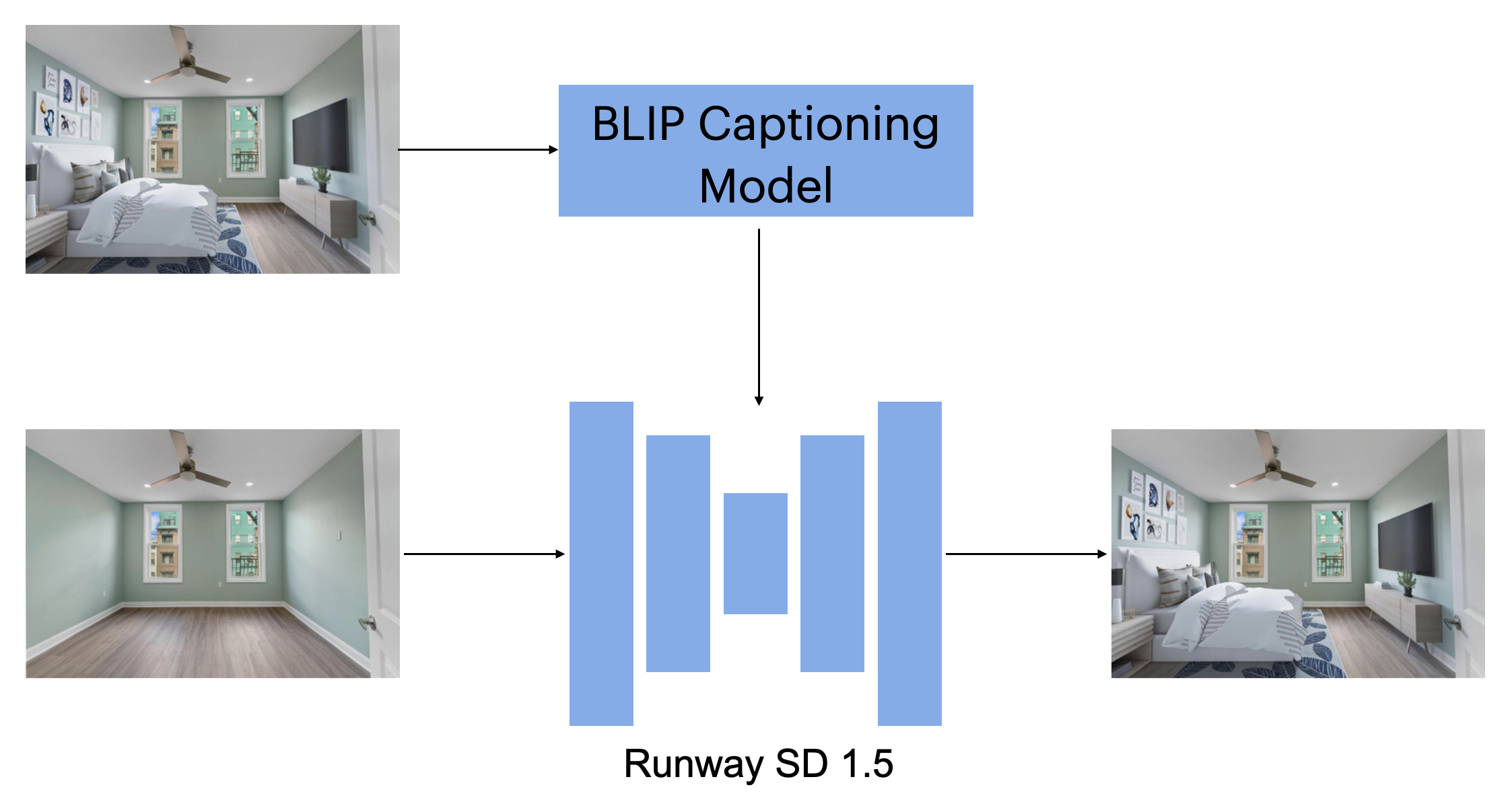

After trying some off-the-shelf image-to-image and inpainting methods, which did not work well, we opted for a simple approach inspired by InstructPix2Pix, since our paired data closely matches the setup of the InstructPix2Pix paper. InstructPix2Pix, however, also conditions on a text prompt, which our dataset lacks, so we use an image captioning model (BLIP) to caption the staged image of each pair and use that caption as the text condition.
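A minimal sketch of the captioning step, assuming the Hugging Face `transformers` BLIP classes and the `Salesforce/blip-image-captioning-base` checkpoint (the exact BLIP variant used in the project is not stated). The helper names `make_blip_captioner` and `build_records`, and the column names `input_image`, `edit_prompt` and `edited_image`, are hypothetical; the column names were chosen to resemble those of the diffusers InstructPix2Pix training example, so adjust them to whatever your trainer expects.

```python
def make_blip_captioner(model_name="Salesforce/blip-image-captioning-base"):
    """Load BLIP once and return a function mapping an image path to a caption.

    Assumption: the base BLIP captioning checkpoint; weights download on first use.
    """
    # Imported lazily so the record-building code below works without these packages.
    from PIL import Image
    from transformers import BlipForConditionalGeneration, BlipProcessor

    processor = BlipProcessor.from_pretrained(model_name)
    model = BlipForConditionalGeneration.from_pretrained(model_name)

    def caption(image_path):
        image = Image.open(image_path).convert("RGB")
        inputs = processor(images=image, return_tensors="pt")
        output_ids = model.generate(**inputs, max_new_tokens=30)
        return processor.decode(output_ids[0], skip_special_tokens=True)

    return caption


def build_records(pairs, caption_fn):
    """Turn (empty_path, staged_path) pairs into InstructPix2Pix-style training rows.

    Only the *staged* image is captioned; that caption becomes the text
    condition for editing the empty image into the staged one.
    """
    records = []
    for empty_path, staged_path in pairs:
        records.append({
            "input_image": str(empty_path),           # hypothetical column name
            "edit_prompt": caption_fn(staged_path).strip(),
            "edited_image": str(staged_path),         # hypothetical column name
        })
    return records
```

Passing the captioner in as a function keeps the dataset logic independent of BLIP, so a different captioning model can be swapped in without touching `build_records`.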

An overview of the training pipeline is shown below:

To fit training on a single RTX 4090 (24 GB), we use Runway's Stable Diffusion 1.5 as the base model and train a LoRA in InstructPix2Pix style on triplets of empty image, staged image and the BLIP caption of the staged image. We train for about 5,000 steps, which takes roughly 2 to 3 hours.
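Two ingredients make SD1.5 usable in InstructPix2Pix style with a LoRA: the UNet's first convolution must accept 8 input channels (the noisy latent concatenated with the latent of the empty-room image), and only small low-rank updates are trained on top of frozen weights. The NumPy sketch below illustrates both ideas in isolation. It is not the project's training code, the helper names are hypothetical, and the project does not state how its input convolution was adapted; zero-initialising the extra input channels follows the InstructPix2Pix paper, and zero-initialising the LoRA `B` matrix is the usual LoRA convention.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight, rank=4, alpha=4.0, seed=0):
        rng = np.random.default_rng(seed)
        out_features, in_features = weight.shape
        self.weight = weight  # frozen base weight, shape (out, in)
        # A gets a small random init; B starts at zero, so the update is initially a no-op.
        self.A = rng.normal(0.0, 0.01, size=(rank, in_features))
        self.B = np.zeros((out_features, rank))
        self.scale = alpha / rank

    def __call__(self, x):
        # x: (batch, in_features) -> (batch, out_features)
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T


def expand_conv_in(weight, extra_in_channels=4):
    """Widen a conv kernel (out, in, kh, kw) with zero-initialised input channels.

    The widened kernel accepts the 8-channel input while producing exactly the
    same output as the original 4-channel kernel at initialisation.
    """
    out_c, _, kh, kw = weight.shape
    zeros = np.zeros((out_c, extra_in_channels, kh, kw), dtype=weight.dtype)
    return np.concatenate([weight, zeros], axis=1)


def unet_input(noisy_latent, empty_image_latent):
    """Concatenate on the channel axis: (B, 4, H, W) + (B, 4, H, W) -> (B, 8, H, W)."""
    return np.concatenate([noisy_latent, empty_image_latent], axis=1)
```

Because both the new convolution channels and the LoRA update start at zero, training begins from the unmodified SD1.5 behaviour and only gradually learns to use the empty-room latent.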

At inference time, we condition the model on the empty-room image together with a prompt suited to the room type (e.g. living room, bedroom) to generate a staged version of the room. Generating a single image takes about 4 seconds with 100 denoising steps.
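At sampling time, InstructPix2Pix uses classifier-free guidance with two scales, one for the image condition and one for the text condition (Brooks et al., 2023). This sketch shows that combination on plain arrays, plus a hypothetical room-type prompt lookup; the template wording, helper names and scale values are illustrative assumptions, not the ones used in this project.

```python
import numpy as np

# Hypothetical prompt templates per room type; the project's actual prompts are not given.
ROOM_PROMPTS = {
    "living room": "a furnished living room with a sofa, a coffee table and a rug",
    "bedroom": "a furnished bedroom with a bed, nightstands and a lamp",
}


def staging_prompt(room_type):
    """Look up a prompt for a room type, falling back to a generic template."""
    key = room_type.strip().lower()
    return ROOM_PROMPTS.get(key, f"a furnished {key}")


def instruct_pix2pix_guidance(eps_uncond, eps_image, eps_full,
                              image_scale=1.5, text_scale=7.5):
    """Combine three noise predictions with separate image and text guidance scales.

    eps_uncond: prediction with neither image nor text conditioning
    eps_image:  prediction conditioned on the empty-room image only
    eps_full:   prediction conditioned on both the image and the text prompt
    """
    return (eps_uncond
            + image_scale * (eps_image - eps_uncond)
            + text_scale * (eps_full - eps_image))
```

Under this scheme, each of the 100 sampling steps needs three noise predictions (unconditional, image only, image plus text), which are usually computed together as one batch of three. Raising `image_scale` keeps the output closer to the empty-room photo, while raising `text_scale` follows the prompt more strongly.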

Visual Results

References

- Rombach, R., Blattmann, A., Lorenz, D., Esser, P., & Ommer, B. (2022). High-Resolution Image Synthesis with Latent Diffusion Models. CVPR.

- Brooks, T., Holynski, A., & Efros, A. A. (2023). InstructPix2Pix: Learning to Follow Image Editing Instructions. CVPR.

- Li, J., Li, D., Xiong, C., & Hoi, S. (2022). BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. ICML.