Training-Free 360° Panorama Generation

Progressive inpainting via equirectangular projection, based on Meta's ICCV 2025 paper "A Recipe for Generating 3D Worlds From a Single Image"

This project implements a training-free 360° panorama generation pipeline using equirectangular projection and progressive inpainting, based on the Meta 2025 paper “A Recipe for Generating 3D Worlds From a Single Image”.

Method Overview

The approach uses a progressive inpainting strategy with equirectangular projection to generate complete 360° panoramas from text prompts or input images.

Panorama Synthesis Process

1. Equirectangular Projection

The input perspective image is embedded into an equirectangular panorama by converting pixel coordinates to spherical coordinates ($\theta$, $\phi$) and then to equirectangular coordinates.

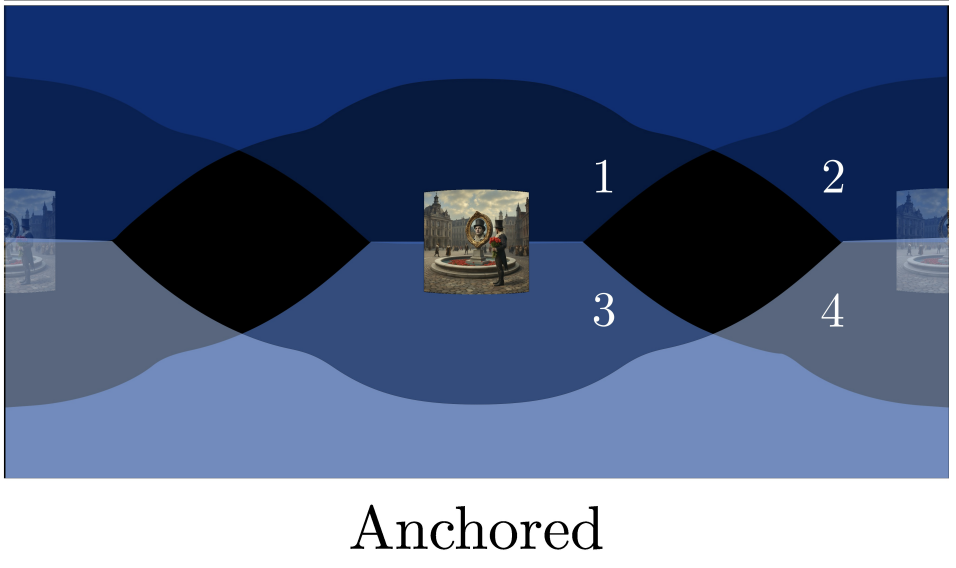
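As a rough illustration of the coordinate chain described above (pixel, then spherical, then equirectangular), the following NumPy sketch maps every pixel of a pinhole image to panorama coordinates. The function name, the axis convention (x right, y down, z forward), and the choice of $\theta$ as longitude and $\phi$ as latitude are assumptions made for this example; the project's actual code may use different conventions.

```python
import numpy as np

def perspective_to_equirect_coords(width, height, fov_deg, pano_w, pano_h,
                                   yaw_deg=0.0, pitch_deg=0.0):
    """Map each pixel of a pinhole image to equirectangular pixel coordinates.

    Hypothetical helper: conventions are assumptions, not taken from the paper.
    """
    # Focal length in pixels, derived from the horizontal field of view.
    f = 0.5 * width / np.tan(np.radians(fov_deg) / 2.0)
    # Pixel centers relative to the image center.
    u, v = np.meshgrid(np.arange(width) + 0.5 - width / 2.0,
                       np.arange(height) + 0.5 - height / 2.0)
    # Unit camera rays: x right, y down, z forward.
    d = np.stack([u, v, np.full_like(u, f)], axis=-1)
    d /= np.linalg.norm(d, axis=-1, keepdims=True)
    # Rotate by pitch (about x, positive looks up), then yaw (about y).
    p, y = np.radians(pitch_deg), np.radians(yaw_deg)
    rx = np.array([[1, 0, 0],
                   [0, np.cos(p), -np.sin(p)],
                   [0, np.sin(p), np.cos(p)]])
    ry = np.array([[np.cos(y), 0, np.sin(y)],
                   [0, 1, 0],
                   [-np.sin(y), 0, np.cos(y)]])
    d = d @ (ry @ rx).T
    # Spherical coordinates: theta = longitude, phi = latitude (up is positive).
    theta = np.arctan2(d[..., 0], d[..., 2])
    phi = np.arcsin(np.clip(-d[..., 1], -1.0, 1.0))
    # Equirectangular pixel coordinates: longitude spans the width, latitude the height.
    x = (theta / (2.0 * np.pi) + 0.5) * pano_w
    y_px = (0.5 - phi / np.pi) * pano_h
    return x, y_px
```

With zero yaw and pitch, the center pixel of the input lands at the center of the panorama; the returned coordinates can then be used to splat or resample the image into the equirectangular canvas.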

2. Progressive Inpainting - “Anchored” Strategy

The method implements an “Anchored” synthesis strategy:

- Step 1: Input image is duplicated to the backside of the panorama to anchor the synthesis

- Step 2: Separate prompts are generated for sky and ground regions using a vision-language model

- Step 3: Synthesis begins with sky and ground generation to maximize global context

- Step 4: Backside anchor is removed and remaining regions are generated by rendering and outpainting perspective images

- Multiple overlapping perspective views are rendered:

  - 8 images with 85° FoV for the middle region

  - 4 images each with 120° FoV for the top and bottom regions
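Two pieces of the steps above lend themselves to a small sketch: placing the backside anchor and laying out the overlapping views. The code below assumes the anchor is a plain 180° yaw shift of the known region (a horizontal roll by half the panorama width) and that views are spaced evenly in yaw. All function names are hypothetical, and the pitch angles of the top and bottom views are not stated here, so they are left out.

```python
import numpy as np

def add_backside_anchor(pano, known_mask):
    """Copy the known region to the opposite side of the panorama.

    Assumption: the duplicate is a pure 180° yaw shift. Returns the new
    panorama and the mask of anchor pixels so they can be cleared later.
    """
    shift = pano.shape[1] // 2                  # half the width = 180° of longitude
    back = np.roll(pano, shift, axis=1)
    back_mask = np.roll(known_mask, shift, axis=1)
    anchor = back_mask & ~known_mask            # never overwrite the real input
    out = pano.copy()
    out[anchor] = back[anchor]
    return out, anchor

def view_yaws(num_views):
    """Evenly spaced yaw angles in degrees, starting at 0."""
    return [i * 360.0 / num_views for i in range(num_views)]

def horizontal_overlap(num_views, fov_deg):
    """Overlap in degrees between neighboring views, measured for views at zero pitch."""
    return fov_deg - 360.0 / num_views
```

Under these assumptions, 8 views at 85° FoV are spaced 45° apart and overlap their neighbors by 40°, which gives each outpainting step a band of already generated content to condition on. Removing the anchor in Step 4 amounts to clearing the pixels flagged by the returned `anchor` mask.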

3. Inpainting Network

The pipeline uses a ControlNet-based inpainting model that is conditioned on the masked input image. The implementation builds on FLUX-ControlNet-Inpainting.
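To make "conditioned on masked input images" concrete, here is a minimal sketch of how such a conditioning pair might be prepared from a rendered view. The helper name and the mask convention (1 marks pixels to generate) are assumptions for illustration; the actual model may expect a different layout or value range.

```python
import numpy as np

def make_inpaint_condition(image, known_mask):
    """Build the masked image and the inpaint mask for one rendered view.

    Hypothetical helper. image: (H, W, 3) array, known_mask: (H, W) bool array
    that is True where the view already contains generated or input content.
    """
    inpaint_mask = ~known_mask                  # True where content must be generated
    masked = image.copy()
    masked[inpaint_mask] = 0                    # blank out the unknown pixels
    return masked, inpaint_mask.astype(np.float32)
```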

4. Refinement

An optional partial denoising pass is applied to improve image quality and ensure smooth transitions between inpainted regions.
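The idea behind partial denoising can be sketched as follows: instead of starting from pure noise, the finished panorama is noised only part of the way and then denoised again, so the global layout is kept while seams and fine detail are resynthesized. The sketch assumes a rectified-flow style interpolation between image and noise; `velocity_fn`, `strength`, and `num_steps` are hypothetical stand-ins and not the settings used by this project.

```python
import numpy as np

def partial_denoise(x0, velocity_fn, strength=0.3, num_steps=10, rng=None):
    """Noise x0 up to level `strength`, then integrate back to t = 0.

    velocity_fn(x, t) is a hypothetical stand-in for the generative model's
    predicted velocity; it is not a real library call.
    """
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.standard_normal(x0.shape)
    # Start from a partially noised image rather than pure noise (t = 1).
    x = (1.0 - strength) * x0 + strength * noise
    ts = np.linspace(strength, 0.0, num_steps + 1)
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        # Euler step along the predicted velocity field toward t = 0.
        x = x + (t_next - t_cur) * velocity_fn(x, t_cur)
    return x
```

A smaller `strength` keeps the result closer to the input panorama, while a larger value gives the model more freedom to change it.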

Results

The method successfully generates high-quality 360° panoramas with smooth transitions between regions. The progressive inpainting strategy ensures global consistency while maintaining local detail.

Note: Because the equirectangular 360° layout is filled by generating multiple images one after another, generation takes several minutes (each image requires a separate inference run of the FLUX-Dev Inpainting ControlNet model).

Summary

The approach generates 360° panoramas in a training-free fashion by combining equirectangular mapping with inpainting networks, which allows large-scale T2I networks to be used without training on panorama images. The generated panoramas can also scale past the

Technical Details

The implementation follows the panorama generation method described in the paper. Key technical components include:

- Equirectangular coordinate transformations

- Progressive region-based inpainting with overlapping views

- ControlNet-based inpainting for seamless blending

- Optional refinement via partial denoising

References

- Schwarz, Katja, et al. “A Recipe for Generating 3D Worlds From a Single Image.” International Conference on Computer Vision (ICCV), 2025, https://arxiv.org/abs/2503.16611.