Few-Shot Robot Grasping with a DINOv2 Backbone

A method for data-efficient, top-down robot grasp detection with DINOv2.

This project was done in the summer/fall of 2023.

Method

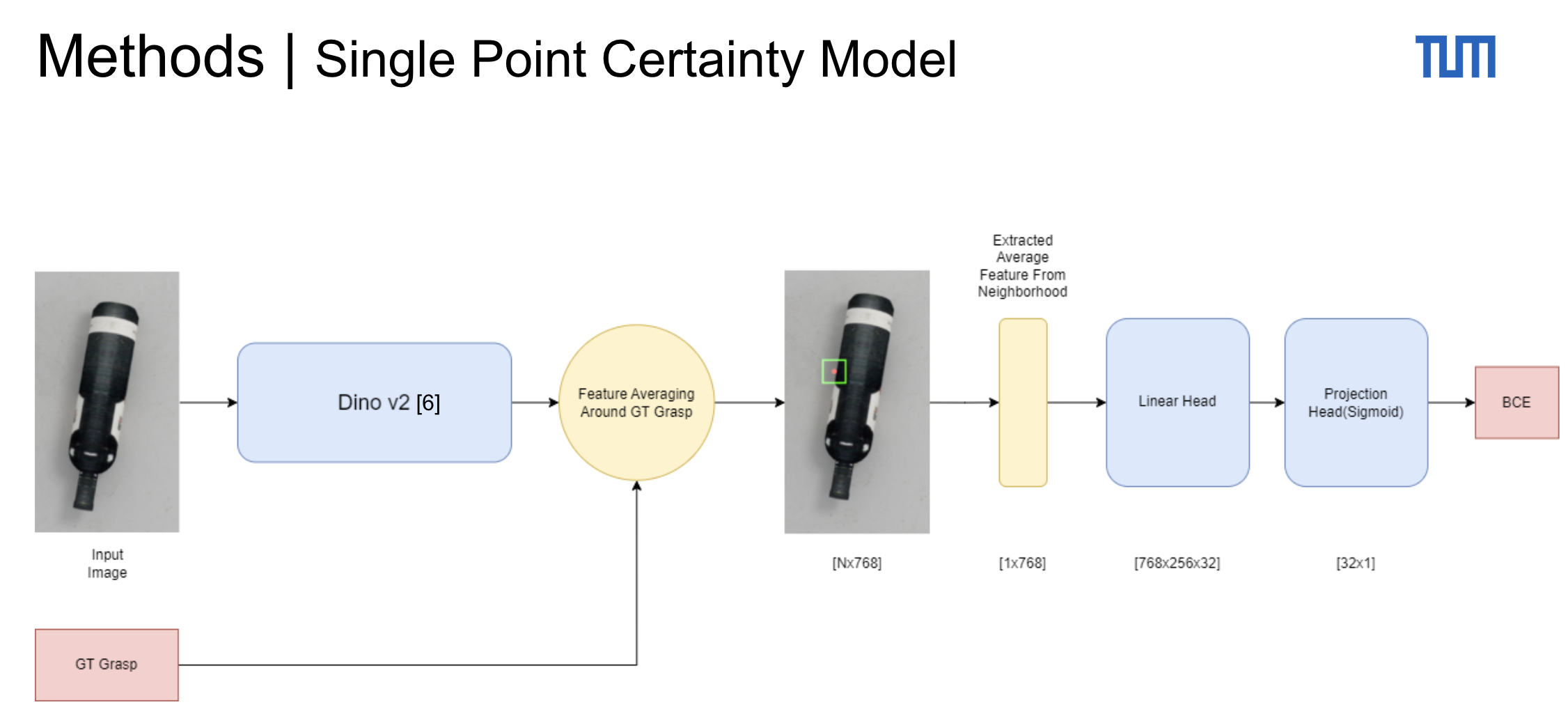

Vision foundation models such as DINOv2 have shown promising performance across computer vision tasks. Most notably, Zero-Shot Category-Level Object Pose Estimation (ECCV 2022) demonstrated that self-supervised DINO ViT features (the predecessor of DINOv2) can be combined with a cyclical distance to perform robust zero-shot, category-level pose estimation. Inspired by this work, we investigate whether DINOv2 features can be used to perform data-efficient keypoint detection in the robotic grasping domain. The core idea of this project is to use DINOv2 features for few-shot, category-level grasping of previously unseen objects within the same category. We propose a pipeline that trains on top of frozen DINOv2 features and outperforms the baseline method, Grasp Keypoint Network (GKNet), in the data-constrained setting. The pipeline is a two-stage procedure. First, a heatmap-based model detects the most certain first grasp point; an overview of this part of the pipeline is shown below:

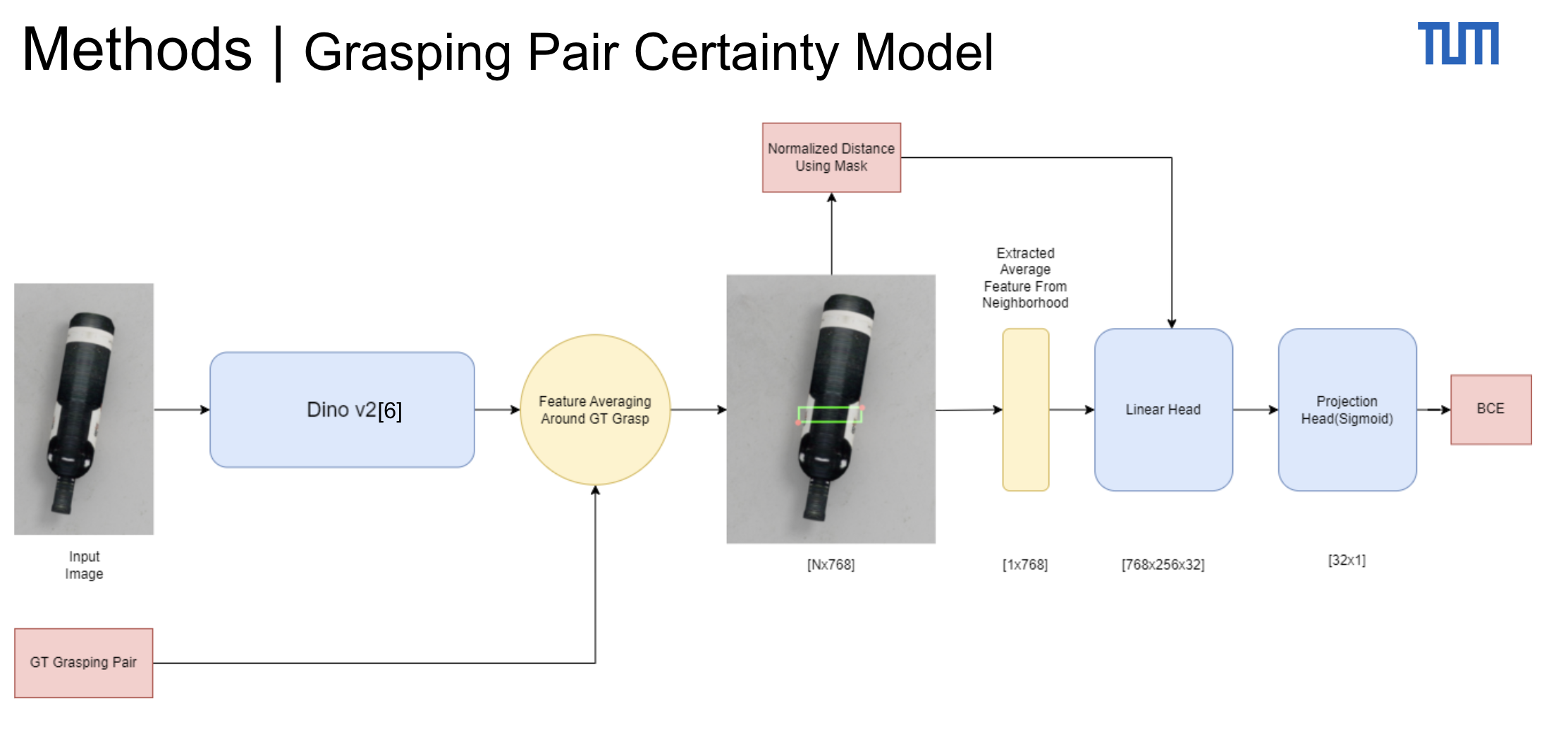
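To make the heatmap formulation concrete, here is a minimal sketch of how a training target for the first grasp point could be built and how a predicted heatmap could be decoded back to a pixel. It is an illustration under assumptions, not the project's implementation: the 224x224 input size, the 16x16 heatmap resolution, the `sigma` value, and the function names `gaussian_heatmap` and `decode_peak` are all hypothetical. Only the patch size of 14 comes from DINOv2 itself.

```python
import math

def gaussian_heatmap(height, width, center, sigma=2.0):
    """Build a height x width target with a Gaussian peak at center=(row, col)."""
    cy, cx = center
    return [
        [math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2.0 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]

def decode_peak(heatmap):
    """Return (row, col, score) of the highest-scoring heatmap cell."""
    best = (0, 0, heatmap[0][0])
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if value > best[2]:
                best = (y, x, value)
    return best

# Assumed setup: a 224x224 image split into 14x14 patches gives a 16x16 grid.
PATCH = 14
target = gaussian_heatmap(16, 16, center=(5, 9))

# Decoding the target itself recovers the annotated patch.
row, col, score = decode_peak(target)

# Map the patch index back to the pixel at the center of that patch.
pixel = (row * PATCH + PATCH // 2, col * PATCH + PATCH // 2)
```

Taking the single highest peak matches the idea of picking the "most certain" first grasp point. A head trained on frozen features would regress a map like `target` from the patch features.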

Building on this, we train a second model that predicts the second grasp point given the first point as input. Like the first-point model, it is trained as a heatmap model, since the heatmap formulation performed best in our experiments.

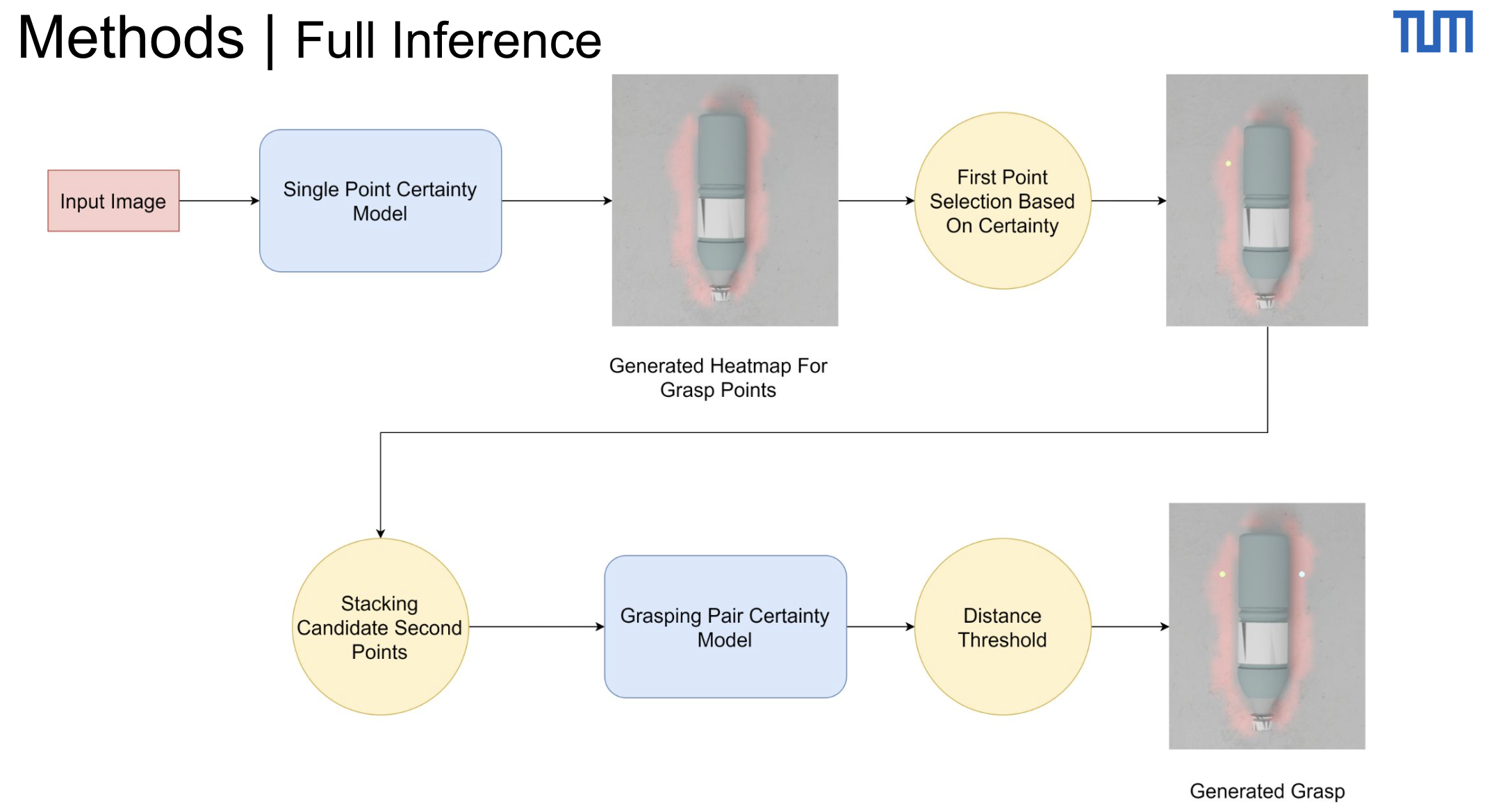

At inference time, the two models are combined to produce a full two-point grasp prediction, as shown below.
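The two-stage inference can be sketched as follows. The callables `first_model` and `second_model` are hypothetical stand-ins for the two trained heads (the project's actual interfaces are not given here), and the toy models below exist only to make the example runnable. Deriving a center, angle, and width from the two points is likewise an assumption about how a two-point grasp might be consumed downstream.

```python
import math

def argmax_2d(heatmap):
    """Return (row, col) of the maximum value in a 2D list."""
    best_value, best_pos = float("-inf"), (0, 0)
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if value > best_value:
                best_value, best_pos = value, (y, x)
    return best_pos

def predict_grasp(features, first_model, second_model):
    """Chain two heatmap models into one two-point grasp.

    first_model(features) -> heatmap of the first grasp point
    second_model(features, first_point) -> heatmap of the second grasp point
    Both callables are hypothetical placeholders for the trained heads.
    """
    p1 = argmax_2d(first_model(features))
    p2 = argmax_2d(second_model(features, p1))
    d_row, d_col = p2[0] - p1[0], p2[1] - p1[1]
    return {
        "p1": p1,
        "p2": p2,
        "center": ((p1[0] + p2[0]) / 2.0, (p1[1] + p2[1]) / 2.0),
        # Angle of the grasp axis in image coordinates (rows point down).
        "angle_deg": math.degrees(math.atan2(d_row, d_col)),
        "width": math.hypot(d_row, d_col),
    }

# Toy stand-ins so the sketch runs end to end.
def toy_first(features):
    heatmap = [[0.0] * 8 for _ in range(8)]
    heatmap[4][2] = 1.0
    return heatmap

def toy_second(features, first_point):
    heatmap = [[0.0] * 8 for _ in range(8)]
    row, col = first_point
    heatmap[row][col + 3] = 1.0  # place the second point 3 cells to the right
    return heatmap

grasp = predict_grasp(None, toy_first, toy_second)
```

Because the second model receives the first point as an argument, its prediction is conditioned on the first stage, which mirrors the sequential design described above.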

Results

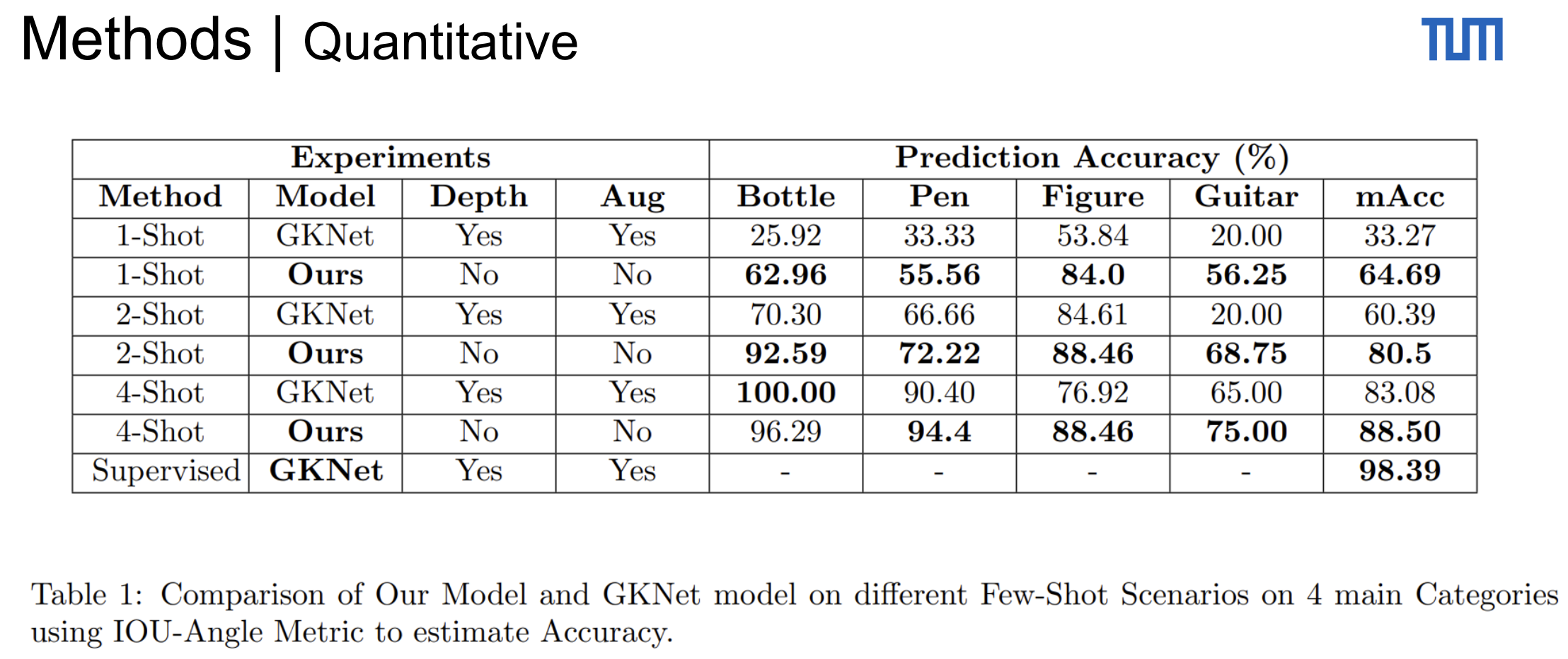

Quantitative

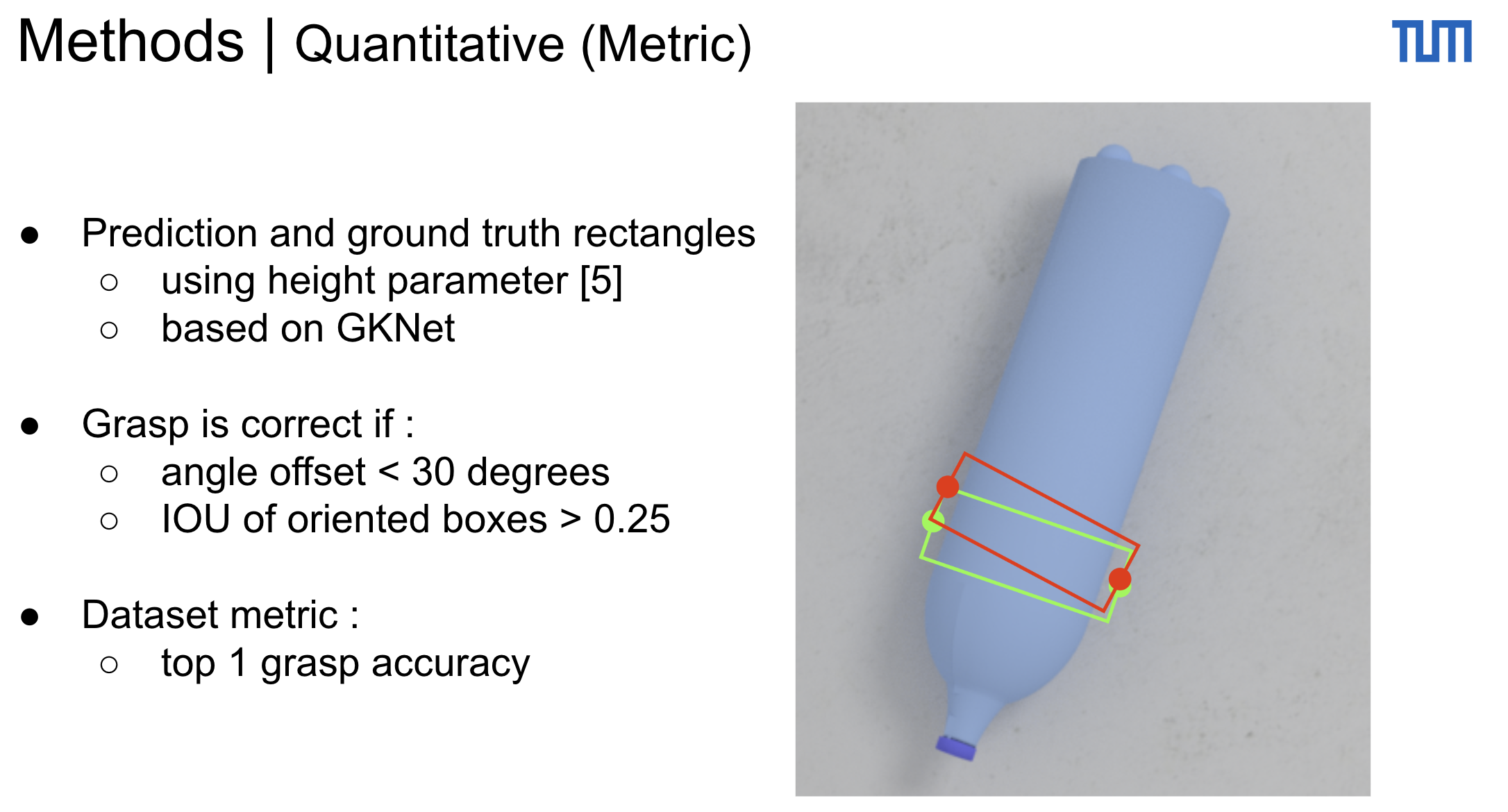

We group the Jacquard dataset into 4 main categories with around 100 different objects each. For evaluation we use the standard metrics proposed with the Jacquard dataset, which are based on the angle offset of the grasp and the IoU between grasp boxes. Each box is constructed from the two grasp points and a fixed height parameter provided by the dataset, which is based on the gripper size.
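As an illustration of this metric, the sketch below builds a box from two grasp points and a fixed height, computes the IoU of two such boxes by polygon clipping, and checks the angle offset. The thresholds of 30 degrees and 0.25 IoU are the values commonly used with this rectangle metric; that this project used exactly these values is an assumption, and all function names are hypothetical.

```python
import math

def grasp_box(p1, p2, height):
    """Counter-clockwise corners of the box whose axis joins p1 and p2 (x, y).

    Assumes p1 != p2, otherwise the axis has no direction.
    """
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    length = math.hypot(dx, dy)
    # Normal to the grasp axis, scaled to half the box height.
    nx, ny = -dy / length * height / 2.0, dx / length * height / 2.0
    return [(p1[0] - nx, p1[1] - ny), (p2[0] - nx, p2[1] - ny),
            (p2[0] + nx, p2[1] + ny), (p1[0] + nx, p1[1] + ny)]

def polygon_area(poly):
    """Shoelace area of a simple polygon (0.0 for an empty list)."""
    total = 0.0
    for i in range(len(poly)):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % len(poly)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def clip(subject, clipper):
    """Sutherland-Hodgman clipping against a convex counter-clockwise polygon."""
    output = subject
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]

        def side(p):
            # Positive when p lies to the left of the directed edge a -> b.
            return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

        current, output = output, []
        for j in range(len(current)):
            p, q = current[j], current[(j + 1) % len(current)]
            sp, sq = side(p), side(q)
            if sp >= 0:
                output.append(p)
            if (sp >= 0) != (sq >= 0):
                # The edge p -> q crosses the clip line; keep the crossing point.
                t = sp / (sp - sq)
                output.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
        if not output:
            return []
    return output

def grasp_iou(box_a, box_b):
    inter = polygon_area(clip(box_a, box_b))
    union = polygon_area(box_a) + polygon_area(box_b) - inter
    return inter / union if union > 0 else 0.0

def angle_offset_deg(p1, p2, q1, q2):
    """Smallest angle between two grasp axes (a grasp is symmetric under 180 degrees)."""
    a = math.degrees(math.atan2(p2[1] - p1[1], p2[0] - p1[0]))
    b = math.degrees(math.atan2(q2[1] - q1[1], q2[0] - q1[0]))
    diff = abs(a - b) % 180.0
    return min(diff, 180.0 - diff)

def is_correct(pred, gt, height, max_angle=30.0, min_iou=0.25):
    """pred and gt are (point, point) pairs; thresholds are assumed defaults."""
    iou = grasp_iou(grasp_box(pred[0], pred[1], height),
                    grasp_box(gt[0], gt[1], height))
    return angle_offset_deg(*pred, *gt) <= max_angle and iou >= min_iou

# A prediction shifted by one unit along the grasp axis of the ground truth.
pred = ((0.0, 0.0), (4.0, 0.0))
gt = ((1.0, 0.0), (5.0, 0.0))
iou = grasp_iou(grasp_box(*pred, 2.0), grasp_box(*gt, 2.0))  # about 0.6
ok = is_correct(pred, gt, height=2.0)
```

Using the same fixed height for the predicted and ground-truth boxes means the IoU depends only on where the two grasp points land, which fits a two-point prediction.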

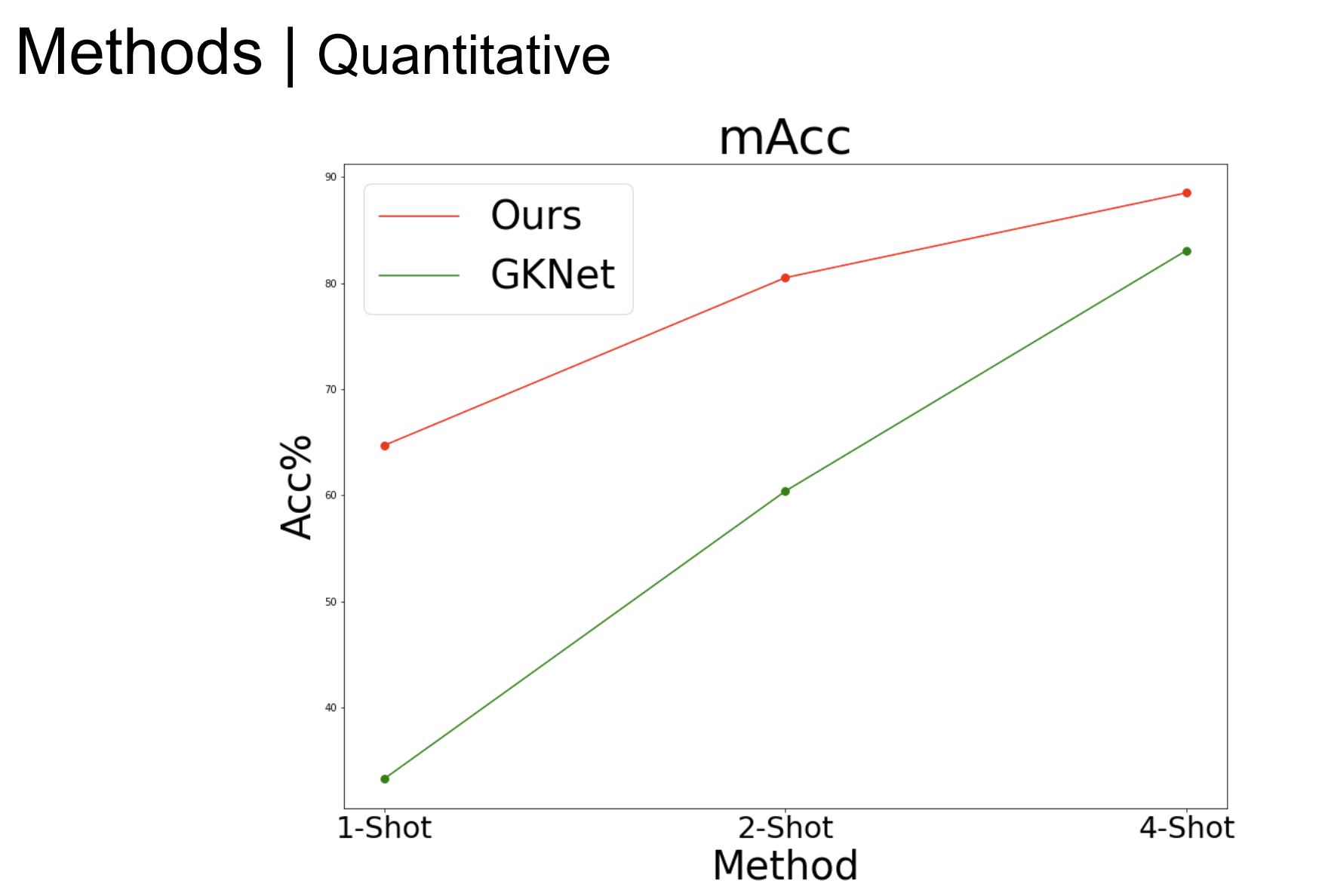

We then train our model and GKNet on 1 to 4 objects with their corresponding grasps and evaluate on the remaining objects of each category. The results show that the strong DINOv2 backbone features allow our method to significantly outperform GKNet in the few-shot setting.
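The few-shot protocol amounts to a per-category split in which a handful of objects are used for training and all others are held out. A minimal sketch follows, assuming objects are identified by string IDs and selected at random with a fixed seed. How the training objects were actually chosen is not stated, so both the random selection and the name `few_shot_split` are hypothetical.

```python
import random

def few_shot_split(object_ids, num_train, seed=0):
    """Pick num_train objects for training; every other object is held out."""
    rng = random.Random(seed)
    shuffled = sorted(object_ids)  # sort first so the split is reproducible
    rng.shuffle(shuffled)
    return shuffled[:num_train], shuffled[num_train:]

# One category with around 100 objects, evaluated with 1 to 4 training objects.
category = [f"obj_{i:03d}" for i in range(100)]
splits = {k: few_shot_split(category, k) for k in (1, 2, 3, 4)}
```

Splitting by object rather than by image keeps every view of a training object out of the evaluation set, so the reported numbers measure generalization to unseen objects.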

We further average the results over all categories. While our method still outperforms GKNet, the gap closes as more training data is used. Note that any task-specific specialization has to be learned in the head of the model, since the DINOv2 ViT backbone is kept frozen in all training runs.

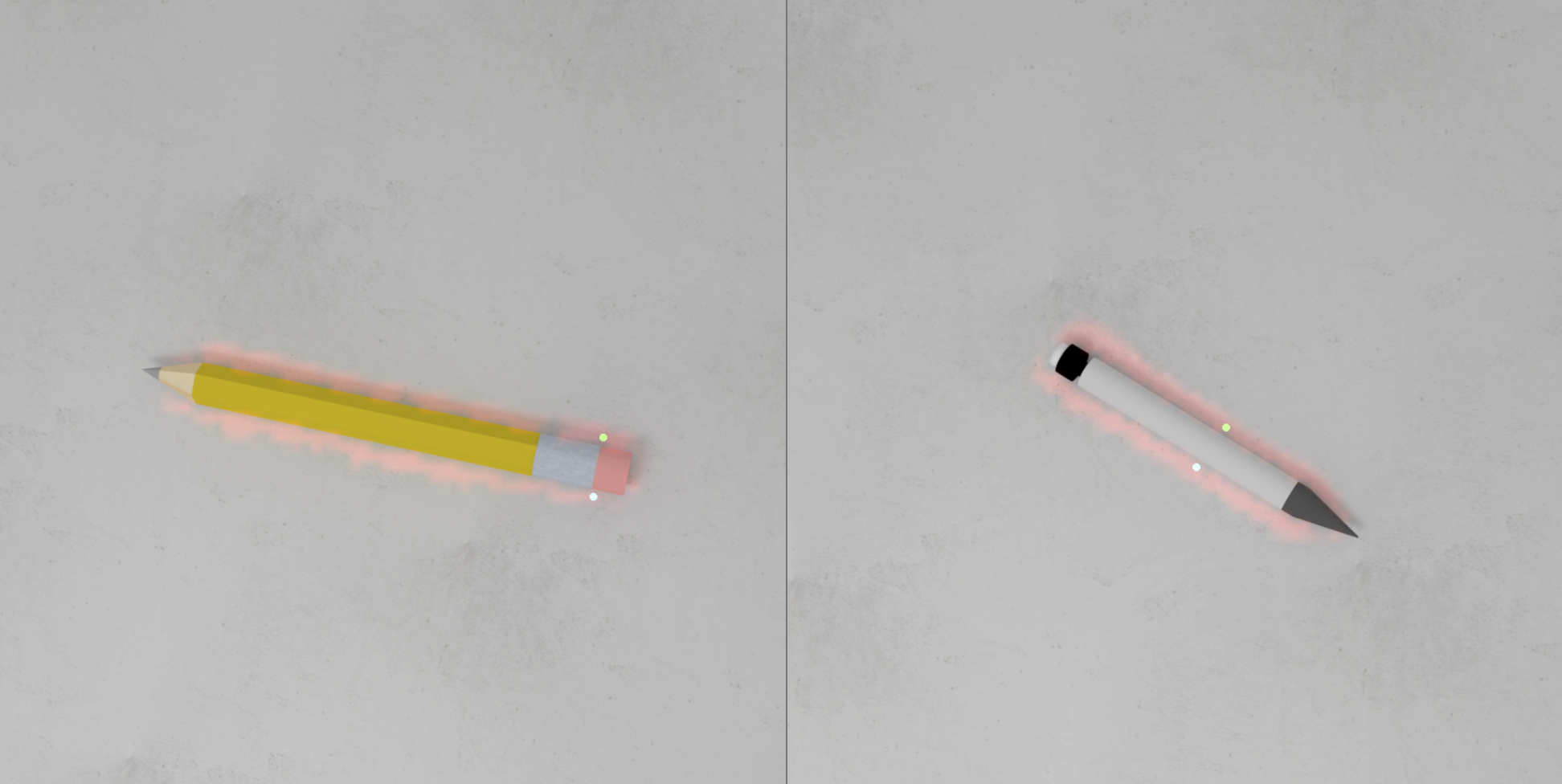

Qualitative

We visualise predictions on unseen objects below, demonstrating the effectiveness of our method.

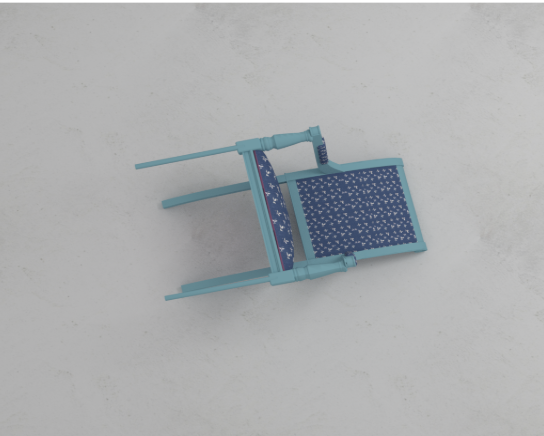

Limitations and Possible Improvements

Due to the 2D nature of our method, we are limited to objects that are “flat” from a bird's-eye view. To predict grasp points for more difficult 3D structures, such as the chair object seen below, we propose extending the approach to incorporate the depth maps from the dataset.

References

- Goodwin, Walter, et al. “Zero-Shot Category-Level Object Pose Estimation.” Proceedings of the European Conference on Computer Vision (ECCV), 2022.

- Xu, Ruinian, Fu-Jen Chu, and Patricio A. Vela. “GKNet: Grasp Keypoint Network for Grasp Candidates Detection.” The International Journal of Robotics Research, 2022.

- Oquab, Maxime, et al. “DINOv2: Learning Robust Visual Features without Supervision.” Transactions on Machine Learning Research (TMLR), 2024.